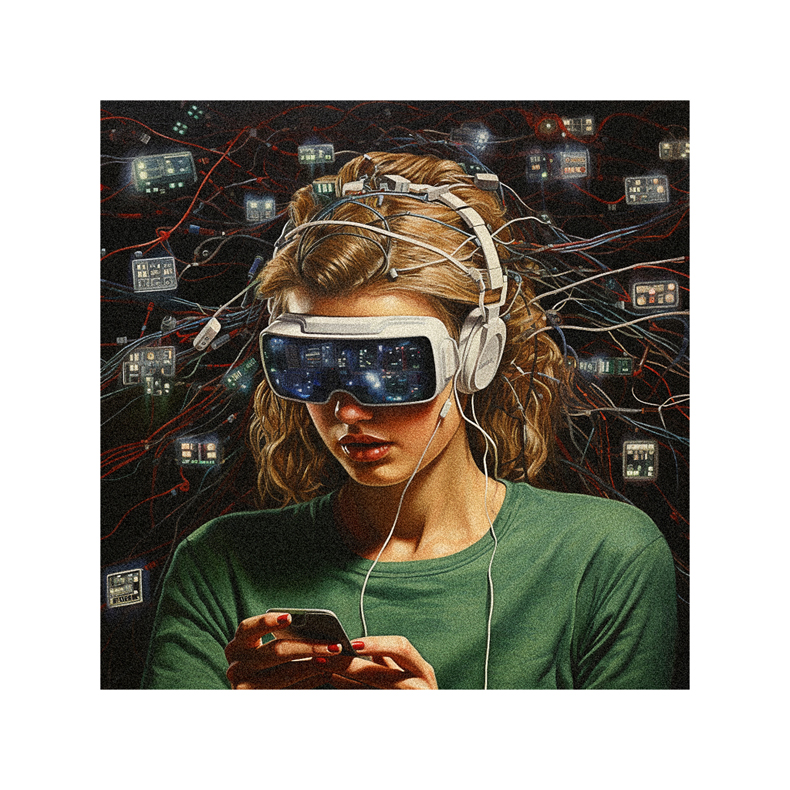

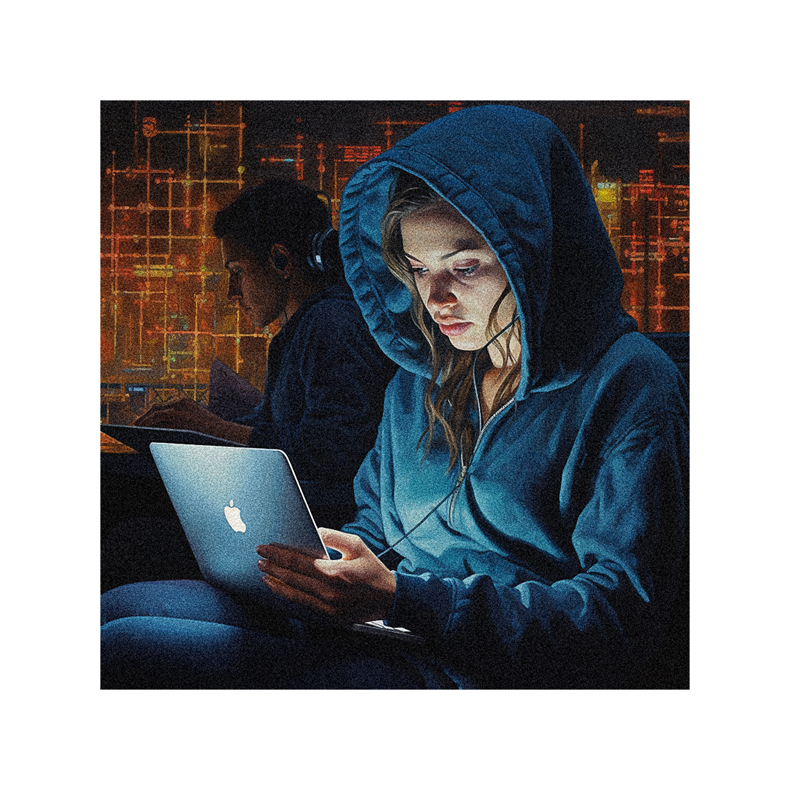

You’re an artist, but your canvas is as ethereal as the cloud. You’re creating art with algorithms, mixing hues of data and design.

But it’s not all pastel pixels. You’re delving into a world where art meets ethics, where creation clashes with controversy.

Are you ready to question, to explore, to redefine? Dive into the complex ethical considerations that swirl around tech-integrated art.

It’s about more than aesthetics; it’s about understanding the implications. Welcome to your brave new world.

Validity and Impact of AI-Generated Art

You’re in the middle of a heated debate about the validity and impact of AI-generated art, especially after the backlash at the 2022 Colorado State Fair art competition, where an image created with Midjourney won first prize in the digital art category.

Outraged critics argue that it’s cheating and that AI can’t replicate the years of effort artists put into their craft. Yet AI can’t simply be banished from the art world.

As an artist, you need to learn to work with, and around, these AI tools. Popular image generators such as DALL-E, Stable Diffusion, and Midjourney are gaining users, but they’re not without controversy, especially around the datasets used to train them.

Lawsuits are emerging over the alleged misuse of images and the fact that, once an image is in a training dataset, access to it can’t easily be revoked. In this fast-changing landscape, transparency and disclosure are paramount.

Potential Legal Issues With AI-Generated Art

As you explore AI-generated art, consider the legal issues that could arise, such as copyright infringement and the misuse of personal images scraped from social media. It’s essential to understand which datasets these AI programs are trained on. If they’re using your images, you should know whether you can revoke access and whether misuse gives you grounds for legal action.

Transparency is key here: knowing how these algorithms learn and adapt can help you avoid legal pitfalls. The unpredictability of AI output adds another layer of complexity to the legal landscape.

Ethical Concerns About Stereotypical and Sexualized Portrayals in AI-Generated Art

As you dig deeper into AI-generated art, you’ll need to confront the stereotypical and sexualized portrayals that some algorithms produce. It’s important to understand that these problems arise not from the AI itself but from the data used to train it: if the training set is biased, the AI’s output will reflect that bias. This issue extends well beyond art and harms the most vulnerable members of society.

As you navigate this field, consider how these biases might affect your work. Be vigilant about the data you use, and consider the ethical implications of your creations. Always strive for transparency and fairness.

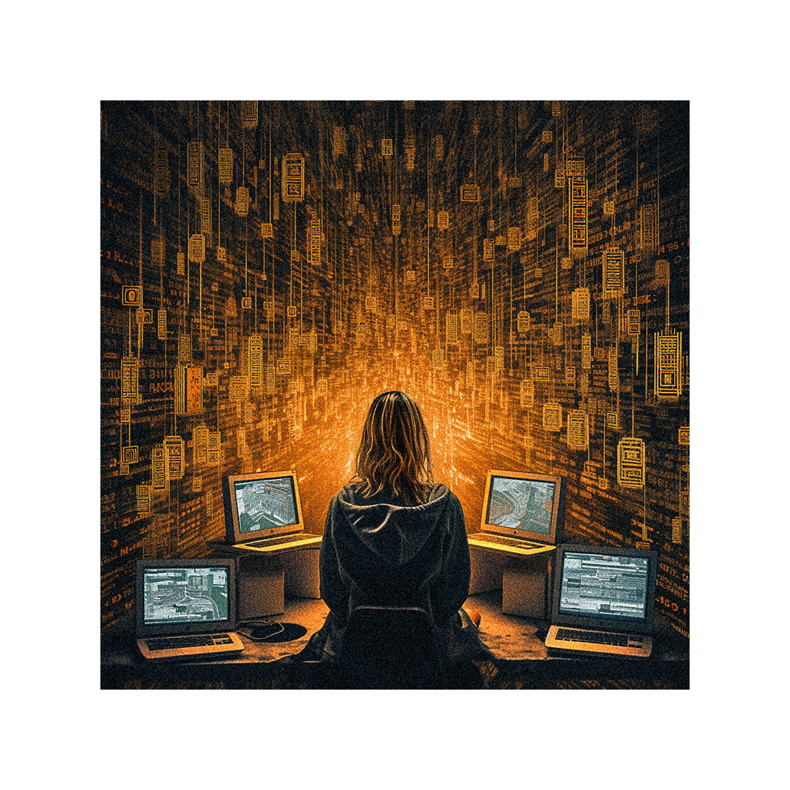

AI’s Impact on Industries and the Economy

Turning to the economic landscape, AI’s influence is clearly far-reaching, and it has become a crucial component of industries such as healthcare, banking, and manufacturing. Businesses worldwide are investing heavily in AI, with one forecast putting global spending at roughly $50 billion in 2020 and more than $110 billion by 2024.

Retail and banking are the biggest spenders, but don’t be surprised when the media and government sectors start pouring funds into AI as well.

As AI disrupts and reshapes industries over the coming decade, you’ll be witnessing a seismic shift. But remember, with great power comes great responsibility. As AI’s economic impact grows, so must our attention to its ethical implications.

Your role in this landscape, whether as a consumer, creator, or regulator, will make a difference.

Ethical Concerns in AI Decision-Making

You’re now stepping into thorny territory: AI decision-making isn’t just about economic efficiency; it also raises serious questions of societal harm and fairness.

It’s crucial to understand that AI systems, without proper oversight, can cause more harm than good. Private companies often deploy AI with little government oversight, leaving room for bias and unfair practices. These systems make life-altering decisions about healthcare, employment, and criminal justice, often with no safeguards to ensure fairness.

The lack of transparency in AI systems is a significant concern. Structural biases can be encoded into these systems, perpetuating existing societal prejudices.

Addressing these ethical concerns is essential; your role in this space is pivotal. So, brace yourself for the challenges and opportunities that lie ahead.

Lack of Oversight in AI Regulation

The landscape of AI regulation is a bit of a Wild West right now, with companies often left to self-police and rely on existing laws and market forces. There’s a lack of consensus on how AI should be regulated and who should set the rules.

Government regulators often lack the technical understanding of AI needed to regulate it effectively. Requiring every AI product to be prescreened for potential social harms seems impractical and could stifle innovation. Instead, industry-specific panels with a deeper understanding of the technology might provide more thorough oversight.

It’s a complex issue that requires a careful balance between fostering innovation and ensuring ethical practices.

Need for Government Regulation and Technical Understanding

You’ll undoubtedly agree that government regulators need to step up their game when it comes to understanding and regulating AI. At present, many regulators lack the technical expertise to oversee this rapidly advancing technology effectively. This deficiency isn’t just a stumbling block; it’s a gaping hole in our societal safety net.

Consider this: the European Union, which already has rigorous data-privacy laws, is working on a regulatory framework for ethical AI use, the AI Act. The U.S., however, is lagging. It’s time for lawmakers to act with urgency.

Responsibility of Businesses and Education

On top of government regulation, don’t forget the crucial role businesses and education play in addressing AI’s mounting ethical issues. As a leader in your industry, you’re uniquely positioned to influence ethical practices. You can implement internal regulations that prioritize fairness and transparency in AI applications. But it’s not just about the now; it’s also about the future.

Consider the role of education. You can advocate for curricula that incorporate ethical AI use, preparing future generations to navigate these complex issues. Encourage continuous learning within your organization, promoting a culture that values ethical decision-making.

It’s not a simple task, but taking responsibility in this way can have a powerful impact on the ethical landscape of AI. After all, change starts with you.

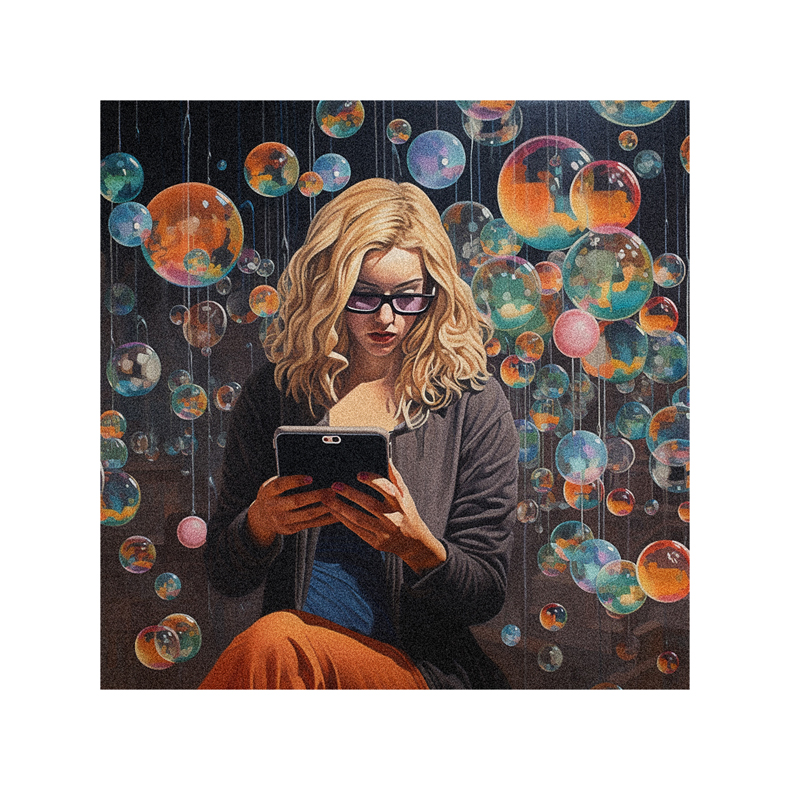

Concerns About AI-Generated Content

You’re now navigating the complex waters of AI-generated content, where pitfalls such as the spread of harmful content and data privacy violations are ever-present. It’s tricky terrain. You’re dealing with potential copyright issues, legal exposure, and the risk of disclosing sensitive information. But remember: mastery comes from understanding and addressing these concerns head-on.

Bias and fairness are another vital consideration. AI-generated content can amplify existing bias, and the lack of explainability can make it difficult to ensure fairness. You’re not just creating content; you’re shaping the societal impact of AI.

Concerns About Bias and Fairness

Bias and fairness in AI-generated content might be a hard nut to crack, but it’s a challenge you’re certainly up for. AI algorithms often amplify existing biases, reflecting the prejudices embedded in their training data. The lack of explainability, the inability to interpret why an AI made a particular decision, is a genuine concern. This opacity can lead to unfair outcomes, particularly when AI is used to make significant decisions.

Your task is to ensure that your AI systems are fair and unbiased. You’ll need to scrutinize the data used for training, question the decisions made by the AI, and continuously strive for transparency. It’s a hefty responsibility, but one you’re ready to shoulder.

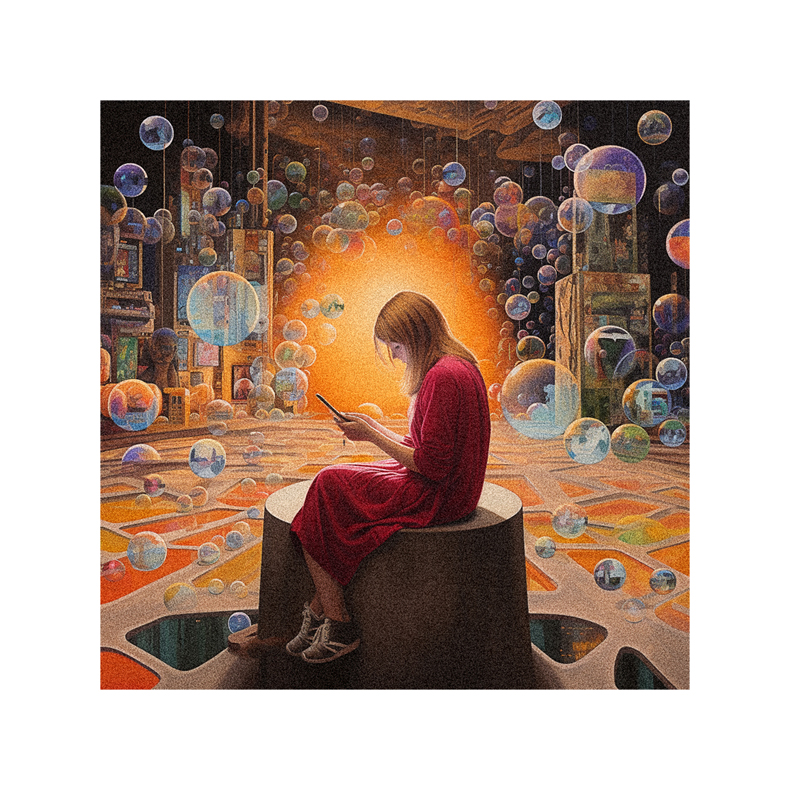

Concerns About Workforce and Societal Impact

It’s crucial to consider how generative AI systems can affect workforce roles and morale, as well as the significant decisions they’re increasingly relied upon to make. You’re navigating uncharted waters here.

As AI systems take on tasks traditionally performed by humans, questions about job displacement and the changing nature of work become more pressing.

These systems can also shape societal norms and behaviors, influencing everything from purchasing decisions to political viewpoints.

However, they’re not infallible. Biases embedded in their training data can lead to unfair outcomes, perpetuating societal inequities. You must monitor these systems, ensuring they’re used responsibly.

Concerns About Data Governance

In generative AI, you must understand and address concerns about data governance, particularly issues surrounding data provenance. You need to grasp how AI systems collect, store, and use data. Understanding this helps prevent data misuse or misinterpretation and keeps your AI projects ethically compliant.

Often, AI models are trained on vast datasets, some of which may contain sensitive information. You’ve got to stay vigilant and implement strict measures to maintain data privacy and confidentiality without compromising the efficiency of your AI system.

Moreover, you must ensure transparency in data handling. This builds trust among users and sets a standard for responsible AI use.

Next Steps in Generative AI Ethics

You’re now entering the crucial phase of developing guidelines and regulations for generative AI ethics. It’s essential to ensure that these systems aren’t only innovative but also ethical and fair. Along the way, you’ll need to stay up to date with the latest research and technologies while keeping an eye on the societal implications of your work.

Training and educating AI practitioners is your next step. Equip them with the knowledge and tools to design and implement AI systems responsibly. Encourage them to think critically about the ethical implications of their work, and foster an environment that prioritizes transparency and accountability.

Keep in mind that auditing and monitoring generative AI systems is no small task, but it’s vital for maintaining ethical standards. Engaging in public dialogue is equally important, as it promotes understanding and acceptance of AI ethics in the wider community.

Skills Needed for Prompt Engineers

Now, let’s delve into the skills needed for prompt engineers.

As a prompt engineer, you need to have a blend of technical and creative skills.

On the technical side, you should be conversant with machine learning concepts, especially natural language processing, and comfortable with Python and frameworks such as TensorFlow and PyTorch.

Creatively, you should have excellent writing skills and the ability to understand the context and nuances of language.

You’ll also need strong problem-solving skills to navigate the complexities of AI systems.

Additionally, understanding the ethical considerations associated with AI is crucial. This includes being aware of potential biases and ensuring fairness in your models.

Preventing Deepfakes in the Era of Generative AI

Deepfakes, created with generative AI, are a growing concern you’ll have to grapple with, as they can fuel serious misinformation and privacy violations. They’re no longer just a problem for politicians and celebrities; they’re becoming a concern for everyone. As deepfakes grow more sophisticated, it’s increasingly difficult to tell real content from fake.

You must stay vigilant online, scrutinizing what you consume and share. The fight against deepfakes isn’t yours alone: tech companies are developing detection tools, and governments are considering regulatory measures. Still, you need to understand this technology, its implications, and how to safeguard against it.
